Vintern-4B-v1 ❄️ - The LLaVA 🌋 Challenger

What's new in Vintern-4B-v1!

- We successfully reproduced the training process of InternVL from scratch.

- The model is the result of integrating sail/Sailor-4B-Chat and InternViT-300M-448px through an MLP layer.

- It is expected to outperform the Vintern-1B version on inference, image recognition, text, and OCR tasks.

- Additional experiments are currently being conducted.

Model Details

| Model Name | Vision Part | Language Part |
|---|---|---|
| Vintern-4B-v1 | InternViT-300M-448px | Sailor-4B-Chat |

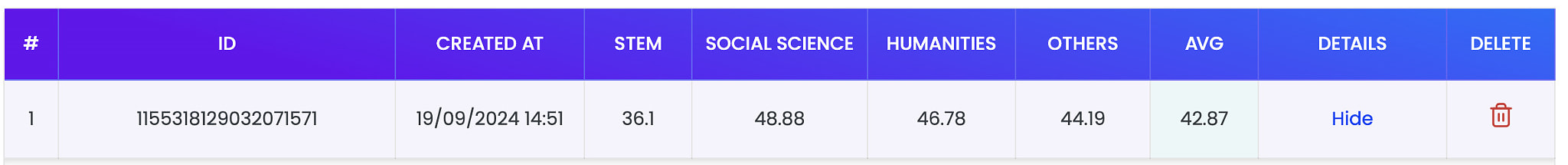

Zalo VMLU Benchmark

Vintern-4B-v1 scores 42.87 on the Zalo VMLU benchmark. An example generation configuration and prompt:

generation_config = dict(max_new_tokens=64, do_sample=False, num_beams=1, repetition_penalty=3.5)
# Prompt (Vietnamese): act as a highly accurate teacher and output only the letter of the correct option for the multiple-choice question that follows.
question = "Bạn là thầy giáo giải trắc nghiệm rất chính xác. Bạn biết chắc chắn đáp án đúng nhất. Chỉ đưa ra chữ cái đứng trước câu trả lời đúng của câu hỏi trắc nghiệm sau: Một doanh nghiệp có vốn đầu tư nước ngoài có trụ sở chính ở Việt Nam, thì: Lựa Chọn: A. Được ĐKDN và HĐKD theo pháp luật Việt Nam B. Được ĐKDN và HĐKD theo pháp luật nước ngoài C. Được ĐKDN và HĐKD theo pháp luật Việt Nam và pháp luật nước ngoài tùy theo từng vấn đề cụ thể D. Cả A, B và C đều sai"
# Passing None in place of pixel values runs a text-only query.
response = model.chat(tokenizer, None, question, generation_config)
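Because the prompt asks for only the letter of the correct option, scoring a run mostly comes down to pulling that letter out of the response. The following is a minimal sketch of that step, not the evaluation code behind the reported score: `build_prompt` and `extract_choice` are hypothetical helper names, and the instruction text is copied from the example prompt above.

```python
import re

# Instruction copied from the example prompt above.
INSTRUCTION = (
    "Bạn là thầy giáo giải trắc nghiệm rất chính xác. "
    "Bạn biết chắc chắn đáp án đúng nhất. "
    "Chỉ đưa ra chữ cái đứng trước câu trả lời đúng của câu hỏi trắc nghiệm sau: "
)


def build_prompt(stem, options):
    """Format a question stem and an ordered list of options as one prompt string."""
    letters = "ABCDEFGH"
    choices = " ".join(f"{letters[i]}. {text}" for i, text in enumerate(options))
    return f"{INSTRUCTION}{stem} Lựa Chọn: {choices}"


def extract_choice(response, valid="ABCD"):
    """Return the first standalone option letter in the response, or None."""
    # A letter counts only if it is not part of a longer word (e.g. the "C" in "Câu").
    match = re.search(rf"(?<!\w)([{valid}])(?!\w)", response.strip())
    return match.group(1) if match else None
```

A response such as `"A"` or `"Đáp án: B."` yields the letter, while a response with no standalone option letter yields `None` and can be counted as incorrect.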

PhoGPT Benchmark

On the 147 Vietnam-specific questions of the PhoGPT benchmark, Vintern-4B-v1 answers 49 correctly (33.3%), up from 21 (14.3%) for its language backbone, Sailor-4B-Chat.

| Model | Vietnam-specific accuracy, % (correct / total) |
|---|---|
| PhoGPT-4B-Chat | 43.5 (64 / 147) |
| Vistral-7B-Chat | 42.9 (63 / 147) |
| GPT-4-0125-preview | 39.5 (58 / 147) |
| Gemini Pro 1.0 | 34.7 (51 / 147) |
| Vintern-4B-v1 | 33.3 (49 / 147) |
| Sailor-7B-Chat | 27.9 (41 / 147) |
| GPT-3.5-turbo | 22.4 (33 / 147) |
| *Sailor-4B-Chat | 14.3 (21 / 147) |
| SeaLLM-7B-v2 | 13.6 (20 / 147) |
| VBD-Llama2-7B-50B-Chat | 10.9 (16 / 147) |
| Vinallama-7B-Chat | 8.2 (12 / 147) |
| Gemma-7B-it | 6.1 (9 / 147) |

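generation_config = dict(max_new_tokens=128, do_sample=False, num_beams=2, repetition_penalty=1.5)
# Prompt (Vietnamese): "Please answer the following question about Vietnam: which national hero moved the capital from Hoa Lư to Thăng Long?"
question = "Hãy trả lời câu hỏi liên quan đến kiến thức về Việt Nam sau: Anh hùng dân tộc nào dời đô từ Hoa Lư về Thăng Long"
# With return_history=True, model.chat returns a (response, history) pair.
response, history = model.chat(tokenizer, None, question, generation_config, return_history=True)
# Model response: "Anh hùng dân tộc dời đô từ Hoa Lư về Thăng Long là Lý Công Uẩn."
# (English: "The national hero who moved the capital from Hoa Lư to Thăng Long is Lý Công Uẩn.")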
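Each entry in the PhoGPT table above pairs a percentage with the raw count of correct answers out of 147 questions. As a quick check of that arithmetic, the sketch below recomputes a few rows from their counts; the `accuracy_pct` helper is a hypothetical name introduced only for illustration.

```python
TOTAL_QUESTIONS = 147  # number of Vietnam-specific questions

# Correct-answer counts copied from the table above.
correct_counts = {
    "PhoGPT-4B-Chat": 64,
    "Vintern-4B-v1": 49,
    "Sailor-4B-Chat": 21,
}


def accuracy_pct(correct, total=TOTAL_QUESTIONS):
    """Percentage of correct answers, rounded to one decimal place."""
    return round(100 * correct / total, 1)


for name, correct in correct_counts.items():
    print(f"{name}: {accuracy_pct(correct)} ({correct} / {TOTAL_QUESTIONS})")

# Gain of Vintern-4B-v1 over Sailor-4B-Chat, in correct answers.
gain = correct_counts["Vintern-4B-v1"] - correct_counts["Sailor-4B-Chat"]
```

The recomputed values match the table (43.5, 33.3, and 14.3), and the gain over Sailor-4B-Chat is 28 additional correct answers.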

VLSP2023: ViVRC Challenge Benchmark

| Name | F1 |
|---|---|
| ICNLP | 3.6384 |
| Vintern-4B-v1 | 3.5514 |
| Vintern-1B-v2 | 3.4616 |
| linh | 3.4293 |
| DS@ViVRC | 3.4121 |
| DS@UIT Dynasty | 3.3172 |
| NTQ Solution | 3.2926 |
| I, Me & Myself | 3.2396 |
| AVQA_AIO | 2.9018 |
| Vintern-1B-v1 | 2.7256 |
| NguyenLe | 2.7053 |
| nowj2 | 1.6808 |

Other benchmarks are still being run.

Quickstart

The snippet below shows how to load the tokenizer and model and how to generate a response. To run inference with the model, follow the steps outlined in our Colab inference notebook.

import numpy as np
import torch
import torchvision.transforms as T
# from decord import VideoReader, cpu
from PIL import Image
from torchvision.transforms.functional import InterpolationMode
from transformers import AutoModel, AutoTokenizer

# Per-channel ImageNet statistics used to normalize input images.
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)

def build_transform(input_size):
    MEAN, STD = IMAGENET_MEAN, IMAGENET_STD
    transform = T.Compose([
        T.Lambda(lambda img: img.convert('RGB') if img.mode != 'RGB' else img),
        T.Resize((input_size, input_size), interpolation=InterpolationMode.BICUBIC),
        T.ToTensor(),
        T.Normalize(mean=MEAN, std=STD)
    ])
    return transform

def find_closest_aspect_ratio(aspect_ratio, target_ratios, width, height, image_size):
    """Pick the (columns, rows) tile grid whose aspect ratio is closest to the image's."""
    best_ratio_diff = float('inf')
    best_ratio = (1, 1)
    area = width * height
    for ratio in target_ratios:
        target_aspect_ratio = ratio[0] / ratio[1]
        ratio_diff = abs(aspect_ratio - target_aspect_ratio)
        if ratio_diff < best_ratio_diff:
            best_ratio_diff = ratio_diff
            best_ratio = ratio
        elif ratio_diff == best_ratio_diff:
            # On a tie, take the larger grid only if the image has enough pixels for it.
            if area > 0.5 * image_size * image_size * ratio[0] * ratio[1]:
                best_ratio = ratio
    return best_ratio

def dynamic_preprocess(image, min_num=1, max_num=12, image_size=448, use_thumbnail=False):
    # calculate the existing image aspect ratio
    orig_width, orig_height = image.size
    aspect_ratio = orig_width / orig_height

    # enumerate candidate (columns, rows) grids with min_num to max_num tiles, smallest first
    target_ratios = set(
        (i, j) for n in range(min_num, max_num + 1) for i in range(1, n + 1) for j in range(1, n + 1) if
        i * j <= max_num and i * j >= min_num)
    target_ratios = sorted(target_ratios, key=lambda x: x[0] * x[1])

    # find the closest aspect ratio to the target
    target_aspect_ratio = find_closest_aspect_ratio(
        aspect_ratio, target_ratios, orig_width, orig_height, image_size)

    # calculate the target width and height
    target_width = image_size * target_aspect_ratio[0]
    target_height = image_size * target_aspect_ratio[1]
    blocks = target_aspect_ratio[0] * target_aspect_ratio[1]

    # resize the image
    resized_img = image.resize((target_width, target_height))
    processed_images = []
    for i in range(blocks):
        # crop box (left, upper, right, lower) of the i-th tile, in row-major order
        box = (
            (i % (target_width // image_size)) * image_size,
            (i // (target_width // image_size)) * image_size,
            ((i % (target_width // image_size)) + 1) * image_size,
            ((i // (target_width // image_size)) + 1) * image_size
        )
        # split the image
        split_img = resized_img.crop(box)
        processed_images.append(split_img)
    assert len(processed_images) == blocks
    # append a downscaled copy of the whole image when it was split into several tiles
    if use_thumbnail and len(processed_images) != 1:
        thumbnail_img = image.resize((image_size, image_size))
        processed_images.append(thumbnail_img)
    return processed_images

def load_image(image_file, input_size=448, max_num=12):
    image = Image.open(image_file).convert('RGB')
    transform = build_transform(input_size=input_size)
    images = dynamic_preprocess(image, image_size=input_size, use_thumbnail=True, max_num=max_num)
    pixel_values = [transform(image) for image in images]
    pixel_values = torch.stack(pixel_values)
    return pixel_values

model = AutoModel.from_pretrained(
    "5CD-AI/Vintern-4B-v1",
    torch_dtype=torch.bfloat16,
    low_cpu_mem_usage=True,
    trust_remote_code=True,
).eval().cuda()
tokenizer = AutoTokenizer.from_pretrained("5CD-AI/Vintern-4B-v1", trust_remote_code=True, use_fast=False)

test_image = 'test-image.jpg'
pixel_values = load_image(test_image, max_num=6).to(torch.bfloat16).cuda()
generation_config = dict(max_new_tokens=512, do_sample=False, num_beams=3, repetition_penalty=3.5)

# Prompt (Vietnamese): "Describe the image in detail."
question = '<image>\nMô tả hình ảnh một cách chi tiết.'
response, history = model.chat(tokenizer, pixel_values, question, generation_config, history=None, return_history=True)
print(f'User: {question}\nAssistant: {response}')

# Follow-up turn: pass the returned history back in ("Câu hỏi khác" means "Another question").
# question = "Câu hỏi khác ......"
# response, history = model.chat(tokenizer, pixel_values, question, generation_config, history=history, return_history=True)
# print(f'User: {question}\nAssistant: {response}')
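To make the tiling step concrete, here is a small worked example in pure Python (no PIL or torch) of how the tile grid is chosen. It mirrors the selection logic of `find_closest_aspect_ratio` and `dynamic_preprocess` above; the `choose_grid` wrapper and the 1280×720 input size are illustrative assumptions, not part of the model's API.

```python
def choose_grid(width, height, min_num=1, max_num=12, image_size=448):
    """Return the (columns, rows) tile grid selected for an image of the given size."""
    aspect_ratio = width / height
    # Candidate grids with min_num..max_num tiles, ordered by tile count.
    candidates = sorted(
        {(i, j)
         for i in range(1, max_num + 1)
         for j in range(1, max_num + 1)
         if min_num <= i * j <= max_num},
        key=lambda g: g[0] * g[1],
    )
    best_diff, best = float("inf"), (1, 1)
    for cols, rows in candidates:
        diff = abs(aspect_ratio - cols / rows)
        if diff < best_diff:
            best_diff, best = diff, (cols, rows)
        elif diff == best_diff:
            # Tie: take the larger grid only if the image has enough pixels for it.
            if width * height > 0.5 * image_size * image_size * cols * rows:
                best = (cols, rows)
    return best


for max_num in (6, 12):
    cols, rows = choose_grid(1280, 720, max_num=max_num)
    tiles = cols * rows
    # load_image passes use_thumbnail=True, which adds one tile when tiles > 1.
    total = tiles + 1 if tiles > 1 else tiles
    print(f"max_num={max_num}: grid {cols}x{rows}, "
          f"resized to {cols * 448}x{rows * 448}, {total} tiles")
```

With `max_num=6`, the value used in the Quickstart call, a 1280×720 image maps to a 2×1 grid: two 448×448 tiles plus a thumbnail. With the default `max_num=12`, the 2×1 and 4×2 grids tie on aspect ratio, and the tie resolves to 4×2 because the image area exceeds half the area of the larger grid.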
